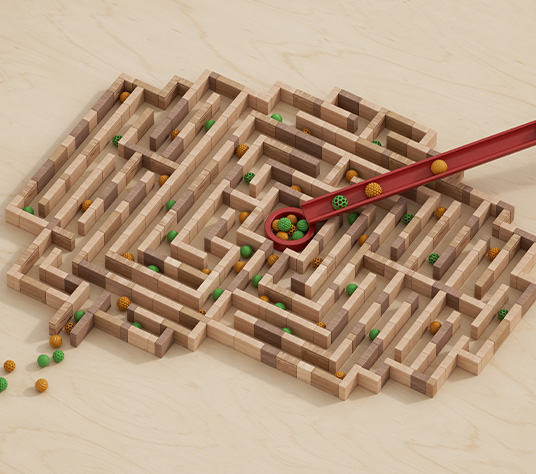

In the fast-paced world of e-commerce, inventory data is a goldmine of insights waiting to be unearthed. Imagine an online retailer with thousands of products, each with its own attributes, stock levels, and sales history. By efficiently ingesting and analyzing this inventory data, the retailer can optimize stock levels, predict demand, and make informed decisions that drive growth and profitability. As data volumes grow and data sources become more complex, efficient data ingestion becomes even more critical.

With advances in artificial intelligence (AI) and machine learning (ML), the demand for real-time, accurate data ingestion has reached new heights. AI and ML models require a constant feed of high-quality data to train, adapt, and deliver accurate insights and predictions. Organizations must therefore prioritize robust data ingestion strategies to harness the full potential of their data assets and stay competitive in the AI-driven era.

Challenges with Existing Data Ingestion Mechanisms

While platforms like Snowflake offer powerful data warehousing capabilities, Snowflake's native ingestion methods, such as Snowpipe and the COPY INTO command, have limitations that can hinder scalability, flexibility, and efficiency.

Limitations of the COPY Method

- Data Transformation Overhead: Extensive transformations applied during the COPY process add overhead; such transformations are often better performed after the data is loaded.

- Limited Horizontal Scalability: Because COPY parallelizes work across files, loading a few very large files can leave warehouse resources underutilized, making it hard to scale efficiently with large data volumes.

- File Format Compatibility: Complex formats like Excel require preprocessing for compatibility with Snowflake’s COPY INTO operation.

- Data Validation and Error Handling: Snowflake’s validation during COPY is limited; additional checks can burden performance.

- Manual Optimization: Achieving optimal performance with COPY demands meticulous file size and concurrency management, adding complexity.
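The file-size management described above can be illustrated with a small planning helper. Snowflake's data-loading guidance recommends compressed files of roughly 100-250 MB, because the number of load operations that run in parallel cannot exceed the number of files being loaded. The sketch below is hypothetical and not part of Snowflake or of Tiger's framework: `plan_splits` and `undersized_files` are illustrative names, and the 150 MB target is an assumption picked from inside that range.

```python
import math
from typing import Dict, List

MB = 1024 * 1024
# Hypothetical targets, chosen inside Snowflake's suggested ~100-250 MB
# (compressed) file-size range for bulk loading.
TARGET_BYTES = 150 * MB
MIN_BYTES = 100 * MB


def plan_splits(file_sizes: Dict[str, int], target: int = TARGET_BYTES) -> Dict[str, int]:
    """Return how many roughly equal chunks each file should be split into.

    Files at or below `target` stay whole (1 chunk); larger files are split
    so that no chunk exceeds `target` bytes.
    """
    return {name: max(1, math.ceil(size / target)) for name, size in file_sizes.items()}


def undersized_files(file_sizes: Dict[str, int], minimum: int = MIN_BYTES) -> List[str]:
    """List files smaller than `minimum`: candidates for combining before COPY."""
    return [name for name, size in file_sizes.items() if size < minimum]


if __name__ == "__main__":
    sizes = {"orders_2024.csv.gz": 1000 * MB, "returns.csv.gz": 20 * MB}
    print(plan_splits(sizes))       # {'orders_2024.csv.gz': 7, 'returns.csv.gz': 1}
    print(undersized_files(sizes))  # ['returns.csv.gz']
```

Splitting oversized files and combining undersized ones are the two levers here; how the files are physically split (for example, by the upstream export job) is outside the scope of this sketch.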

Limitations of Snowpipe

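- Lack of Upsert Support: Snowpipe only appends data (each pipe runs a COPY statement), so upserts require workarounds such as loading into a staging table and applying a MERGE, typically orchestrated with streams and tasks.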

- Limited Real-Time Capabilities: Snowpipe loads data in near real time, which may not meet requirements for instant data availability or complex streaming transformations.

- Scheduling Flexibility: Continuous operation limits precise control over data loading times.

- Data Quality and Consistency: Snowpipe offers limited support for data validation and transformation, requiring additional checks.

- Limited Flexibility: Snowpipe is optimized for continuously loading files into Snowflake, which limits custom processing and integration with external systems.

- Support for Specific Data Formats: Snowpipe supports delimited text, JSON, Avro, Parquet, ORC, and XML (using Snowflake XML format), necessitating conversion for unsupported formats.
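The usual workaround for the missing upsert support is to let Snowpipe (or COPY) land data in a staging table and then apply it to the target with a MERGE statement, often scheduled through a stream and a task. The sketch below generates such a statement; `build_merge_sql` and the table and column names are hypothetical, while the generated SQL follows Snowflake's standard MERGE syntax.

```python
from typing import List


def build_merge_sql(target: str, staging: str, keys: List[str], columns: List[str]) -> str:
    """Build a Snowflake MERGE statement that upserts staging rows into `target`.

    `keys` identify a row; `columns` are the non-key columns to update or insert.
    Identifiers are assumed to be trusted, pre-validated names; no quoting or
    escaping is done here.
    """
    if not keys:
        raise ValueError("an upsert needs at least one key column")
    on_clause = " AND ".join(f"t.{k} = s.{k}" for k in keys)
    all_cols = keys + columns
    sql = f"MERGE INTO {target} AS t\nUSING {staging} AS s\nON {on_clause}\n"
    if columns:
        # Existing rows: overwrite the non-key columns with the staged values.
        updates = ", ".join(f"{c} = s.{c}" for c in columns)
        sql += f"WHEN MATCHED THEN UPDATE SET {updates}\n"
    # New rows: insert every column from the staging table.
    insert_cols = ", ".join(all_cols)
    insert_vals = ", ".join(f"s.{c}" for c in all_cols)
    sql += f"WHEN NOT MATCHED THEN INSERT ({insert_cols}) VALUES ({insert_vals});"
    return sql


if __name__ == "__main__":
    print(build_merge_sql("inventory", "inventory_stage", ["sku"], ["qty", "updated_at"]))
```

The example prints a statement that matches on `sku`, updates `qty` and `updated_at` for existing rows, and inserts new ones. The staging table should hold at most one row per key: by default, Snowflake raises an error when several source rows match the same target row in an update.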

Tiger’s Snowpark-Based Framework – Transforming Data Ingestion

To address these challenges and unlock the full potential of data ingestion, organizations are turning to solutions built on advanced technologies and frameworks. One such solution, which we've built, is Tiger's Snowpark-based framework for Snowflake.

Our solution transforms data ingestion by offering a highly customizable framework driven by metadata tables. Users can efficiently tailor ingestion processes to various data sources and business rules. Advanced auditing and reconciliation ensure thorough tracking and resolution of data integrity issues. Additionally, built-in data quality checks and observability features enable real-time monitoring and proactive alerting. Overall, the Tiger framework provides a robust, adaptable, and efficient solution for managing data ingestion challenges within the Snowflake ecosystem.

Key features of Tiger’s Snowpark-based framework include:

Configurability and Metadata-Driven Approach:

- Flexible Configuration: Users can tailor the framework to their needs, accommodating diverse data sources, formats, and business rules.

- Metadata-Driven Processes: The framework utilizes metadata tables and configuration files to drive every aspect of the ingestion process, promoting consistency and ease of management.
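As a rough illustration of the metadata-driven approach, the sketch below treats each row of a metadata table as the configuration for one source and translates it into the SQL needed to load that source. The field names (`source_name`, `stage_path`, `load_type`, `on_error`, and so on), the `full_refresh` load type, and the functions are hypothetical and do not describe Tiger's actual metadata schema; the generated `COPY INTO` and `TRUNCATE TABLE` statements use standard Snowflake syntax, and `ON_ERROR` is a real COPY option.

```python
from dataclasses import dataclass
from typing import Dict, List

# Subset of COPY INTO's ON_ERROR values accepted by this sketch.
ALLOWED_ON_ERROR = {"CONTINUE", "SKIP_FILE", "ABORT_STATEMENT"}


@dataclass(frozen=True)
class SourceConfig:
    """One row of a hypothetical ingestion metadata table."""
    source_name: str
    stage_path: str                   # e.g. "@raw_stage/inventory/"
    file_format: str                  # name of an existing Snowflake FILE FORMAT object
    target_table: str
    load_type: str = "append"         # hypothetical values: "append" or "full_refresh"
    on_error: str = "ABORT_STATEMENT"


def plan_load(cfg: SourceConfig) -> List[str]:
    """Translate one metadata row into the ordered SQL statements for a load."""
    if cfg.on_error not in ALLOWED_ON_ERROR:
        raise ValueError(f"{cfg.source_name}: unsupported on_error {cfg.on_error!r}")
    copy_sql = (
        f"COPY INTO {cfg.target_table} FROM {cfg.stage_path} "
        f"FILE_FORMAT = (FORMAT_NAME = '{cfg.file_format}') "
        f"ON_ERROR = {cfg.on_error}"
    )
    if cfg.load_type == "append":
        return [copy_sql]
    if cfg.load_type == "full_refresh":
        # Replace the table contents: empty it first, then reload from the stage.
        return [f"TRUNCATE TABLE {cfg.target_table}", copy_sql]
    raise ValueError(f"{cfg.source_name}: unknown load_type {cfg.load_type!r}")


def plan_all(rows: List[Dict[str, str]]) -> Dict[str, List[str]]:
    """Build a load plan per source from rows fetched from the metadata table."""
    return {row["source_name"]: plan_load(SourceConfig(**row)) for row in rows}


if __name__ == "__main__":
    metadata_rows = [
        {"source_name": "inventory", "stage_path": "@raw_stage/inventory/",
         "file_format": "csv_fmt", "target_table": "raw.inventory"},
        {"source_name": "products", "stage_path": "@raw_stage/products/",
         "file_format": "json_fmt", "target_table": "raw.products",
         "load_type": "full_refresh", "on_error": "CONTINUE"},
    ]
    for source, statements in plan_all(metadata_rows).items():
        print(source, statements)
```

Adding a new source then means inserting one metadata row rather than writing new pipeline code. Note that `TRUNCATE TABLE` also clears the table's load metadata, so a full refresh can reload files that COPY would otherwise skip as already loaded.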

Advanced Auditing and Reconciliation:

- Detailed Logging: The framework provides comprehensive auditing and logging capabilities, ensuring traceability, compliance, and data lineage visibility.

- Automated Reconciliation: Built-in reconciliation mechanisms identify and resolve discrepancies, minimizing errors and ensuring data integrity.
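A basic version of the reconciliation described above compares what was read from the source with what landed in the target, using row counts and an order-independent checksum, and records the result as an audit entry. This is a hypothetical, standalone sketch: both sides are plain Python lists here, whereas in practice the target figures would come from queries against Snowflake, and `reconcile`, `missing_rows`, and `AuditEntry` are illustrative names rather than the framework's API.

```python
import hashlib
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, List, Tuple

Row = Tuple[object, ...]


def row_fingerprint(rows: Iterable[Row]) -> str:
    """Order-independent fingerprint: hash each row, sort the digests, hash the result.

    Both sides must normalize values identically (e.g. 10 and "10" hash differently).
    """
    digests = sorted(hashlib.sha256(repr(row).encode()).hexdigest() for row in rows)
    return hashlib.sha256("".join(digests).encode()).hexdigest()


@dataclass
class AuditEntry:
    """One hypothetical audit-log record for a reconciliation run."""
    table: str
    source_rows: int
    target_rows: int
    checksum_match: bool
    status: str
    checked_at: str


def reconcile(table: str, source: List[Row], target: List[Row]) -> AuditEntry:
    """Compare source and target rows and return an audit record of the outcome."""
    checksum_match = row_fingerprint(source) == row_fingerprint(target)
    status = "OK" if len(source) == len(target) and checksum_match else "MISMATCH"
    return AuditEntry(table, len(source), len(target), checksum_match, status,
                      datetime.now(timezone.utc).isoformat())


def missing_rows(source: List[Row], target: List[Row]) -> List[Row]:
    """Rows present in the source but absent (or under-represented) in the target."""
    return list((Counter(source) - Counter(target)).elements())


if __name__ == "__main__":
    source = [("SKU1", 10), ("SKU2", 5)]
    target = [("SKU1", 10)]
    print(reconcile("inventory", source, target).status)  # MISMATCH
    print(missing_rows(source, target))                   # [('SKU2', 5)]
```

Sorting the per-row digests makes the fingerprint independent of row order, which matters because a table's rows have no guaranteed order; `missing_rows` then pinpoints which records need to be re-sent.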

Enhanced Data Quality and Observability:

- Real-Time Monitoring: The framework offers real-time data quality checks and observability features, enabling users to detect anomalies and deviations promptly.

- Custom Alerts and Notifications: Users can set up custom thresholds and receive alerts for data quality issues, facilitating proactive monitoring and intervention.
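The custom thresholds and alerts described above can be sketched as a list of rules evaluated against each incoming batch, with every violation passed to a notification callback. The two rule types (a maximum null fraction per column and a minimum row count) and the `notify` callback are hypothetical examples, not the framework's actual rule catalog; in practice `notify` might post to email, Slack, or an incident tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Protocol, Sequence

Record = Dict[str, object]


class Rule(Protocol):
    """Anything with a `check` method that returns an alert message or None."""
    def check(self, batch: List[Record]) -> Optional[str]: ...


@dataclass
class NullRateRule:
    """Fail when the share of missing values in `column` exceeds a threshold."""
    column: str
    max_null_fraction: float  # e.g. 0.05 means at most 5% nulls

    def check(self, batch: List[Record]) -> Optional[str]:
        if not batch:
            return None
        # A missing key is treated the same as an explicit None.
        nulls = sum(1 for row in batch if row.get(self.column) is None)
        fraction = nulls / len(batch)
        if fraction > self.max_null_fraction:
            return (f"{self.column}: null fraction {fraction:.2%} "
                    f"exceeds {self.max_null_fraction:.2%}")
        return None


@dataclass
class MinRowsRule:
    """Fail when a batch is smaller than expected (e.g. a truncated extract)."""
    min_rows: int

    def check(self, batch: List[Record]) -> Optional[str]:
        if len(batch) < self.min_rows:
            return f"row count {len(batch)} below minimum {self.min_rows}"
        return None


def run_checks(batch: List[Record], rules: Sequence[Rule],
               notify: Callable[[str], None]) -> List[str]:
    """Evaluate every rule, send one alert per violation, and return all violations."""
    violations = []
    for rule in rules:
        message = rule.check(batch)
        if message is not None:
            violations.append(message)
            notify(message)
    return violations


if __name__ == "__main__":
    batch = [{"sku": "A1", "qty": 4}, {"sku": "B2", "qty": None}]
    rules = [NullRateRule("qty", 0.10), MinRowsRule(1)]
    run_checks(batch, rules, notify=print)  # prints the qty null-rate alert
```

Because each rule only needs a `check` method, new rule types (value ranges, uniqueness, freshness) can be added without changing `run_checks`.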

Seamless Transformation and Schema Evolution:

- Sophisticated Transformations: Leveraging Snowpark’s capabilities, users can perform complex data transformations and manage schema evolution seamlessly.

- Adaptability to Changes: The framework automatically adapts to schema changes, ensuring compatibility with downstream systems and minimizing disruption.
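One way to picture automatic schema adaptation is to compare the columns of an incoming file with the target table's current columns and emit `ALTER TABLE ... ADD COLUMN` statements for anything new. The sketch below assumes both schemas are available as name-to-type mappings (for example, the existing one read from `INFORMATION_SCHEMA.COLUMNS`) with type names already normalized; the function name and the policy of only adding columns while reporting type changes are hypothetical choices, not the framework's documented behavior.

```python
from typing import Dict, List, Tuple


def schema_evolution_sql(table: str, existing: Dict[str, str],
                         incoming: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (ALTER TABLE statements for new columns, warnings for type changes).

    Names are compared in upper case because unquoted Snowflake identifiers
    are stored that way. Columns missing from `incoming` are left in place.
    """
    current = {name.upper(): typ.upper() for name, typ in existing.items()}
    statements: List[str] = []
    warnings: List[str] = []
    for name, typ in incoming.items():
        column, new_type = name.upper(), typ.upper()
        if column not in current:
            statements.append(f"ALTER TABLE {table} ADD COLUMN {column} {new_type}")
        elif current[column] != new_type:
            # Conservative policy: report type drift instead of altering the column.
            warnings.append(f"{column}: type changed from {current[column]} to {new_type}")
    return statements, warnings


if __name__ == "__main__":
    ddl, drift = schema_evolution_sql(
        "raw.inventory",
        existing={"SKU": "VARCHAR", "QTY": "NUMBER"},
        incoming={"sku": "varchar", "qty": "FLOAT", "warehouse_id": "varchar"},
    )
    print(ddl)    # ['ALTER TABLE raw.inventory ADD COLUMN WAREHOUSE_ID VARCHAR']
    print(drift)  # ['QTY: type changed from NUMBER to FLOAT']
```

Only adding columns keeps existing queries working, since consumers that select specific columns are unaffected; type changes are surfaced for review rather than applied automatically, because Snowflake permits only a limited set of in-place column type changes.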

Data remains the fundamental building block that determines the accuracy of analytical and AI output. As businesses race through this data-driven era, investing in robust, future-proof data ingestion frameworks will be key to turning data into real-world insights.