As the maritime industry navigates tremendous volumes of data, the call for accelerated digitalization is stronger than ever. The maritime sector is a vast and intricate ecosystem where data flows continuously across interconnected sectors—from vessel management and maintenance to fuel optimization and emissions control. As the United Nations Conference on Trade and Development highlighted in its 2024 report, digital transformation through technologies like blockchain, artificial intelligence, and automation is crucial for improving port operations. Ports that have embraced these innovations report reduced waiting times, enhanced cargo tracking, and greater efficiency in transshipment processes.

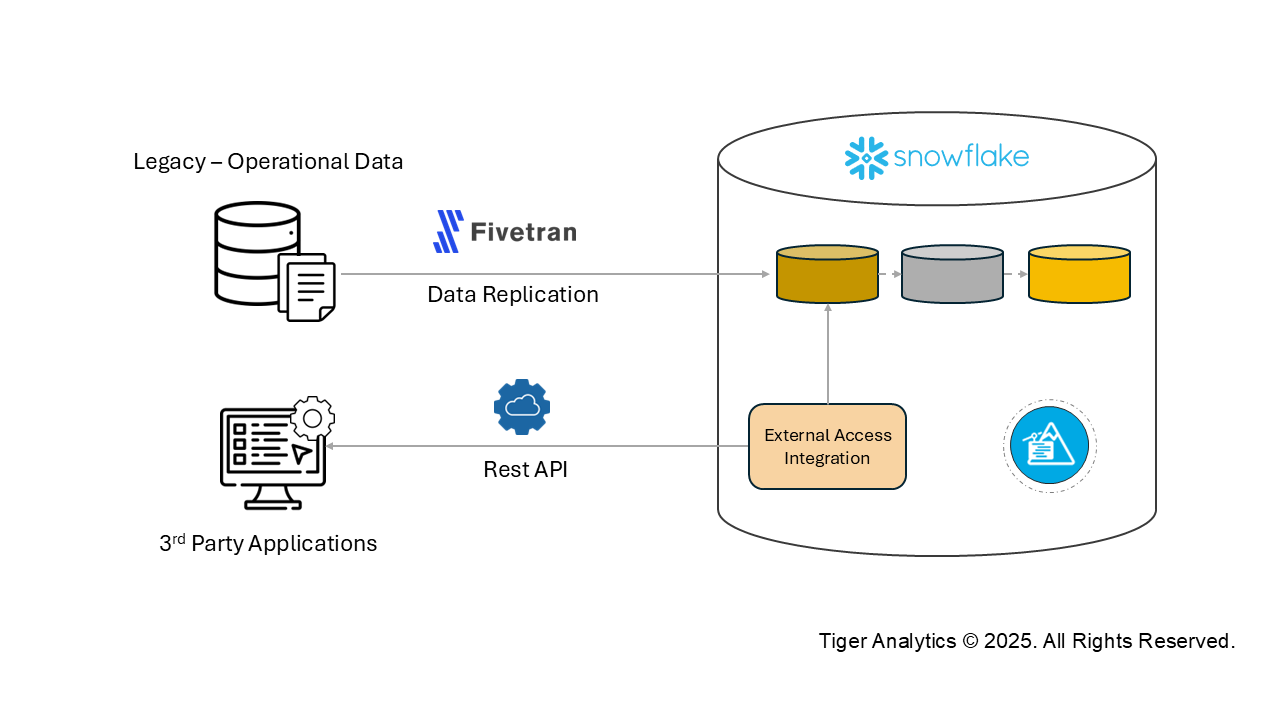

In this data-intensive environment, operational data from ship-installed software is just the beginning. Third-party sources such as AIS data, weather information, and other cloud applications play a vital role in many maritime use cases. Traditionally, integrating this diverse data—often accessed via REST APIs—required external platforms like AWS Lambda or Databricks.

With Snowflake’s introduction of the External Access Integration feature, maritime organizations can now consolidate API data integration and data engineering workflows within a single, powerful platform. This breakthrough not only simplifies operations but also improves flexibility and efficiency.

Let’s discuss a use case

Suppose we need to retrieve crew rest and work hours data from a third-party regulatory service to generate near real-time, period-specific compliance reports for all vessels managed by a ship manager. These details are made available to the business through REST APIs.

Landscape Dissection and Data Enablement

Let’s assume Snowflake is the chosen cloud data warehouse platform, with Azure serving as the primary solution for data lake requirements. Operational data for vessels from various legacy systems and other sources is integrated into Snowflake. Data pipelines and models are then built on this integrated data to meet business needs. The operational data is ingested into Snowflake through a combination of Snowflake’s native data loading options and the replication tool Fivetran.

Challenges Explained

Outbound REST API calls must be made to retrieve crew rest and work hours data. The semi-structured data from the API response will need to undergo several transformations before it can be integrated with the existing vessel operational data in Snowflake. Additionally, the solution must support the near real-time requirements of the compliance report. The new pipeline should seamlessly align with the current data pipelines for ingestion and transformation, ensuring no disruptions to existing processes.

We now explore Snowflake’s external access integration to address these challenges.

What is Snowflake’s External Access Integration?

Snowflake’s External Access Integration empowers businesses to integrate the data seamlessly from diverse external sources and networks, helping them bridge data gaps and providing a holistic view for better decisions. The feature gives users the flexibility to read external data and integrate only which is necessary for the use case while the majority of the data resides at the source. Key benefits of this feature include:

- Enabling real time access to complex third-party data providers

- Eliminating data duplication

- Enriching data with selective data integration that benefits your use case

- Enhanced data-driven decision making

Leveraging Snowflake’s External Access Integration: A Step-by-Step Guide

Here is a complete walkthrough of the procedures to solve our use case:

Step 1: Creating Network Rule

- Snowflake enables its accounts to selectively and securely access databases or services via its network rules. This enhances the security by limiting the list of IPs that can connect to Snowflake.

- CREATE NETWORK RULE command helps us to add the list of APIs that Snowflake account should connect to.

CREATE [OR REPLACE] NETWORK RULE <nw_rule_name> MODE = EGRESS TYPE = HOST_PORT VALUE_LIST = (<api_url_link>)

Step 2: Creating Secret

- Securely save the credentials to be used while authenticating to APIs via secrets in Snowflake.

- CREATE SECRET command is used to represent the credentials such as username and password, which are used to authenticate the API we have added to the network rule in step 1.

Basic Authentication CREATE [ OR REPLACE ] SECRET <secret_name> TYPE = PASSWORD USERNAME = '<username>' PASSWORD = '<password>'

Step 3: Creating External Access Integration

- Specify the network rule and secrets used to connect to the APIs via external access integration.

- CREATE EXTERNAL ACCESS INTEGRATION command aggregates the allowed network rule and secrets to securely use in UDFs or procedures.

CREATE [ OR REPLACE ] EXTERNAL ACCESS INTEGRATION <ext_integration_name> ALLOWED NETWORK RULES = <nw_rule_name> ENABLED = TRUE

Step 4: External Call

There are multiple methods to call external APIs – UDFs or procedures or direct calls from Snowflake Notebooks (Preview Feature as of now). Let’s explore Snowflake Notebooks to make external calls via Python. Snowflake Notebooks offer an interactive environment to code your logics in SQL or Python.

- To make API calls from a particular notebook, enable the created external access integration in step 3 in your notebook. This can be done from the ‘Notebook settings’ options available for the Snowflake notebooks.

- After importing required libraries, call the required APIs and save the response object.

- Leverage Snowflake Snowpark framework to operate on the data frames and save your results to Snowflake tables.

- Use Snowflake’s native functions to flatten and format the semi structured data that is mostly received as a response from the API calls.

- The transformed data via API can be further combined with the operational or modeled data in Snowflake.

Configuration: Creating a network rule and external access integration.

create OR replace network RULE NW_RULE_PUBLIC_API

mode = egress

type = host_port

value_list = ('geocoding-api.open-meteo.com')

create or replace external access integration EAI_PUBLIC_API

allowed_network_rules = (NW_RULE_PUBLIC_API)

enabled = true

Get API Request: Get requests for a public marine REST API

import requests

def get_data_from_marine_api():

url = f'https://geocoding-api.open-meteo.com/v1/search?name=Singapore&count=10&language=en&format=json'

headers = {"content-type": "application/json"}

response = requests.get(url,headers = headers)

return response

response = get_data_from_marine_api()

data = response.json()

data_frame = pd.json_normalize(data)

Using Snowpark: To save the RAW response to the Landing Zone table.

from snowflake.snowpark.context import get_active_session

session = get_active_session()

df1 = session.create_dataframe(data_frame)

df1.write.mode ("overwrite").save_as_table("RAW_GEO_LOCATIONS")

Using Snowpark: To flatten the JSON for further transformations and combine with operational data for further business rules and logics. This notebook can be orchestrated in Snowflake to synchronize with the existing data pipelines.

import snowflake.snowpark as snowpark

from snowflake.snowpark.context import get_active_session

from snowflake.snowpark.functions import col

from snowflake.snowpark.functions import *

session = get_active_session()

flatten_function = table_function("flatten")

geo_locations_raw = session.table("RAW_GEO_LOCATIONS")

geo_locations_tr = geo_locations_raw.join_table_function(flatten_function(geo_locations_raw["RESULTS"])).drop(["SEQ","PATH","RESULTS" "THIS","GENERATIONTIME_MS"])

geo_locations_trf = geo_locations_tr.select(col("index").alias("index"),col("VALUE")["country"].alias("country"),col("VALUE")["country_code"].alias("country_code"),col("VALUE")["longitude"].alias("long"),col("VALUE")["latitude"].alias("lat"),col("VALUE")["name"].alias("name"),col("VALUE")["population"].alias("population"),col("VALUE")["timezone"].alias("timezone"),col("VALUE")["elevation"].alias("elevation"))

geo_locations_trf.write.mode("overwrite").save_as_table("TR_GEO_LOCATIONS")

The Snowflake External Access Integration advantage

- Native feature of Snowflake which eliminates the need for moving data from one environment to another.

- Can be integrated into the existing data pipelines in Snowflake promptly and hence, allows for easy maintenance.

- Can use Snowflake’s Snowpark features and native functions for any data transformations.

- Snowflake’s unified compute environment decreases the cost and enhances the efficiency of data pipelines by reducing the latency.

- Users can not only call the REST APIs via Snowflake external access integration but also web services that are defined by SOAP protocols.

Below is sample code for calling SOAP-based services:

import requests

def get_data_from_web_service():

url = f'https://www.w3schools.com/xml/tempconvert.asmx'

headers = {"content-type": "application/soap+xml"}

xml ="""

<soap12:Envelope xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:soap12="http://www.w3.org/2003/05/soap-envelope">

<soap12:Body>

<CelsiusToFahrenheit xmlns="https://www.w3schools.com/xml/">

<Celsius>20</Celsius>

</CelsiusToFahrenheit>

</soap12:Body>

</soap12:Envelope>"""

response = requests.post(url,headers = headers,data=xml)

return response

response = get_data_from_web_service()

print(response.content)

Summary

The maritime industry, like many others, is embracing digital transformation, driven by the increasing volume and variety of data from complex systems, sensors, agencies, and regulatory bodies. This shift opens new opportunities for leveraging data from diverse sources to drive advanced analytics and machine learning. Snowflake provides a robust platform to support these efforts, offering efficient integration capabilities and external access features that make it easy to handle data from REST APIs. Its flexibility and scalability make Snowflake a valuable tool in helping the maritime industry harness the power of data for improved decision-making and operational efficiency.