A 2024 report on data integrity trends and insights found that 50% of the 550 leading data and analytics professionals surveyed named data quality as the number one issue impacting their organization’s data integration projects. And that’s not all. Poor data quality was also negatively affecting other initiatives meant to improve data integrity, with 67% saying they don’t completely trust the data used for decision-making. As expected, data quality is projected to be a top priority investment for 2025.

Trusted, high-quality data is essential to make informed decisions, deliver exceptional customer experiences, and stay competitive. However, maintaining quality is not quite so simple, especially as data volume grows. Data arrives from diverse sources, is processed through multiple systems, and serves a wide range of stakeholders, increasing the risk of errors and inconsistencies. Poor data quality can lead to significant challenges, including:

- Operational Inefficiencies: Incorrect or incomplete data can disrupt workflows and increase costs.

- Lost Revenue Opportunities: Decisions based on inaccurate data can result in missed business opportunities.

- Compliance Risks: Regulatory requirements demand accurate and reliable data; failure to comply can result in penalties.

- Eroded Trust: Poor data quality undermines confidence in data-driven insights, impacting decision-making and stakeholder trust.

Manual approaches to data quality are no longer sustainable in modern data environments. Organizations need a solution that operates at scale without compromising performance, integrates seamlessly into existing workflows and platforms, and provides actionable insights for continuous improvement.

This is where Tiger Analytics’ Snowflake Native Data Quality Framework comes into play, leveraging Snowflake’s unique capabilities to address these challenges effectively.

Tiger Analytics’ Snowflake Native Data Quality Framework – An Automated and Scalable Solution

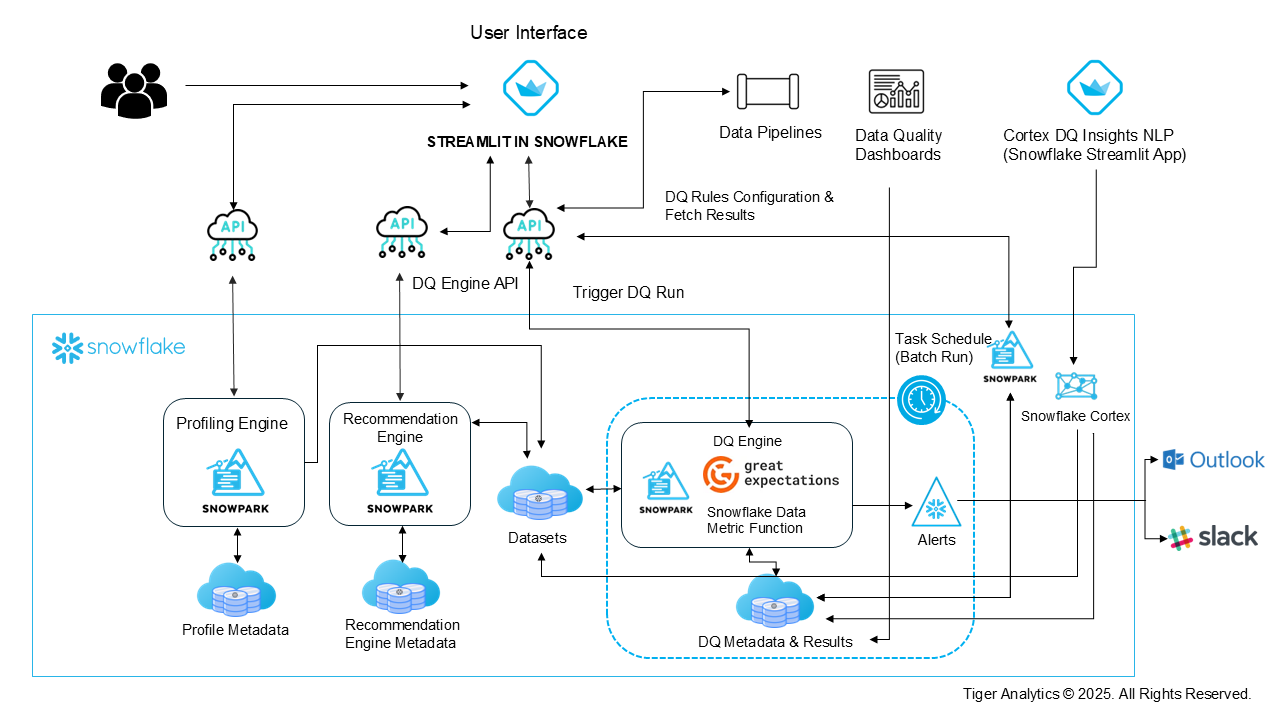

At Tiger Analytics, we created a custom solution leveraging Snowpark, Great Expectations (GE), Snowflake Data Metric Functions, and Streamlit to redefine data quality processes. By designing this framework as Snowflake-native, we capitalize on the platform’s capabilities for seamless integration, scalability, and performance.

Snowflake’s native features offer significant advantages when building a Data Quality (DQ) framework, addressing the evolving needs of data management and governance. These built-in tools streamline processes, ensuring efficient monitoring, validation, and enhancement of data quality throughout the entire data lifecycle:

- Efficient Processing with Snowpark:

Snowpark lets users run complex validations and transformations directly within Snowflake. Its ability to execute Python, Java, or Scala workloads ensures that data remains in place, eliminating unnecessary movement and boosting performance.

- Flexible and Predefined DQ Checks:

The inclusion of Great Expectations and Snowflake Data Metric Functions enables a hybrid approach, combining open-source flexibility with Snowflake-native precision. This ensures that our framework can cater to both standard and custom business requirements.

- Streamlined Front-End with Streamlit:

Streamlit provides an interactive interface for configuring rules, schedules, and monitoring results, making it accessible to users of all skill levels.

- Cost and Latency Benefits:

By eliminating the need for external tools, containers, or additional compute resources, our framework minimizes latency and reduces costs. Every process is optimized to leverage Snowflake’s compute clusters for maximum efficiency.

- Integration and Automation:

Snowflake’s task scheduling, streams, and pipelines ensure seamless integration into existing workflows. This makes monitoring and rule execution effortless and highly automated.

Tiger Analytics’ Snowflake Native Data Quality Framework leverages Snowflake’s ecosystem to provide a scalable and reliable data quality solution that can adapt to the changing needs of modern businesses.

Breaking Down the Tiger Analytics’ Snowflake Native Data Quality Framework

- Streamlit App: A Unified Interface for Data Quality

The Streamlit app serves as a centralized front end, integrating multiple components of the data quality framework. It allows users to configure rules and provides access to the profiler, recommendation engine, scheduling, and monitoring functionalities – all within one cohesive interface.

This unified approach simplifies the management and execution of data quality processes, ensuring seamless operation and an improved user experience.

- Data Profiler

The Data Profiler automatically inspects and analyzes datasets to identify anomalies, missing values, duplicates, and other data quality issues directly within Snowflake. It helps generate insights into the structure and health of the data, without requiring external tools.

It also provides metrics on data distribution, uniqueness, and other characteristics to help identify potential data quality problems.
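To make the kind of metrics a profiler reports more concrete, here is a minimal sketch in plain Python. It is not the framework's actual code: the function names `profile_column` and `profile_table` and the sample rows are hypothetical, and a real implementation would compute these metrics inside Snowflake rather than over in-memory rows.

```python
from collections import Counter
from typing import Any


def profile_column(values: list[Any]) -> dict[str, Any]:
    """Compute basic health metrics for one column of values."""
    total = len(values)
    non_null = [v for v in values if v is not None]
    counts = Counter(non_null)
    null_count = total - len(non_null)
    return {
        "row_count": total,
        "null_count": null_count,
        "distinct_count": len(counts),
        # Non-null rows whose value repeats a value already seen.
        "duplicate_count": len(non_null) - len(counts),
        "null_ratio": null_count / total if total else 0.0,
    }


def profile_table(rows: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Profile every column found in a list of row dictionaries."""
    columns = sorted({name for row in rows for name in row})
    return {c: profile_column([row.get(c) for row in rows]) for c in columns}


# Hypothetical sample data: one duplicate order_id and one missing country.
sample_rows = [
    {"order_id": 1, "country": "US"},
    {"order_id": 2, "country": None},
    {"order_id": 2, "country": "US"},
]
profile = profile_table(sample_rows)
```

Metrics like these (null ratio, distinct count, duplicate count) are the raw material that later steps, such as rule recommendation, can build on.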

- DQ Rules Recommendation Engine

The DQ Rules Recommendation Engine analyzes data patterns and profiles to suggest potential data quality rules based on profiling results, metadata, or historical data behavior. These recommendations can be automatically generated and adjusted for more accurate rule creation.
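As an illustration of how profiling results can drive rule suggestions, the sketch below applies three simple heuristics. The heuristics, the rule names (`not_null`, `unique`, `accepted_values`), and the `max_categories` cutoff are assumptions chosen for the example, not the engine's actual logic.

```python
from typing import Any


def recommend_rules(
    column: str, profile: dict[str, Any], max_categories: int = 5
) -> list[dict[str, str]]:
    """Suggest candidate DQ rules for a column from its profile metrics.

    Expects a profile with 'row_count', 'null_count', and 'distinct_count'.
    """
    rules: list[dict[str, str]] = []
    non_null = profile["row_count"] - profile["null_count"]
    if non_null == 0:
        return rules  # Nothing observed, so nothing to recommend.

    if profile["null_count"] == 0:
        # No missing values seen so far: propose a completeness rule.
        rules.append({"column": column, "rule": "not_null"})

    if profile["distinct_count"] == non_null:
        # Every non-null value is distinct: looks like a key column.
        rules.append({"column": column, "rule": "unique"})
    elif profile["distinct_count"] <= max_categories:
        # Few distinct values: looks categorical.
        rules.append({"column": column, "rule": "accepted_values"})

    return rules


key_like = recommend_rules(
    "order_id", {"row_count": 100, "null_count": 0, "distinct_count": 100}
)
categorical = recommend_rules(
    "status", {"row_count": 100, "null_count": 4, "distinct_count": 3}
)
```

Suggestions produced this way are only candidates. A user would still review and adjust them before they become active rules.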

- DQ Engine

The DQ Engine is the core of Tiger Analytics’ Snowflake Native Data Quality Framework. Built using Snowpark, Great Expectations, and Snowflake Data Metric Functions, it ensures efficient and scalable data quality checks directly within the Snowflake ecosystem. Key functionalities include:

- Automated Expectation Suites:

The engine automatically generates Great Expectations expectation suites based on the configured rules, minimizing manual effort in setting up data quality checks.

- Snowpark Compute Execution:

These expectation suites are executed using Snowpark’s compute capabilities, ensuring performance and scalability for even the largest datasets.

- Results Storage and Accessibility:

All validation results are stored in Snowflake tables, making them readily available for monitoring, dashboards, and further processing.

- On-Demand Metric Execution:

In addition to GE rules, the engine can execute Snowflake Data Metric Functions on demand, providing flexibility for ad hoc or predefined data quality assessments.

This combination of automation, scalability, and seamless integration ensures that the DQ Engine is adaptable to diverse data quality needs.
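The first step described above, turning configured rules into an expectation suite, could look roughly like the following sketch. The rule configuration format and the `build_suite` helper are hypothetical. The expectation names are standard Great Expectations expectations, and the output mirrors the JSON suite layout used by Great Expectations releases before 1.0 (key names differ in newer versions).

```python
import json
from typing import Any

# Maps hypothetical rule names from the configuration layer to
# standard Great Expectations expectation types.
RULE_TO_EXPECTATION = {
    "not_null": "expect_column_values_to_not_be_null",
    "unique": "expect_column_values_to_be_unique",
    "between": "expect_column_values_to_be_between",
    "accepted_values": "expect_column_values_to_be_in_set",
}


def build_suite(suite_name: str, rules: list[dict[str, Any]]) -> dict[str, Any]:
    """Translate configured rules into an expectation-suite dictionary."""
    expectations = []
    for rule in rules:
        kwargs = {"column": rule["column"], **rule.get("params", {})}
        expectations.append(
            {
                "expectation_type": RULE_TO_EXPECTATION[rule["rule"]],
                "kwargs": kwargs,
            }
        )
    return {"expectation_suite_name": suite_name, "expectations": expectations}


# Hypothetical rules, as a user might configure them in the front end.
configured_rules = [
    {"column": "order_id", "rule": "unique"},
    {
        "column": "amount",
        "rule": "between",
        "params": {"min_value": 0, "max_value": 10000},
    },
]
suite = build_suite("orders_suite", configured_rules)
print(json.dumps(suite, indent=2))
```

Keeping the rule configuration separate from the expectation format means the same configured rule could, in principle, be routed to either a Great Expectations check or a Data Metric Function.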


- Scheduling Engine

The Scheduling Engine automates the execution of DQ rules on demand, at specified intervals such as daily, or in sync with other data pipelines. By leveraging Snowflake tasks and streams, it ensures real-time or scheduled rule execution within the Snowflake ecosystem, enabling continuous data quality monitoring.
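For a sense of what scheduling with Snowflake tasks involves, the sketch below builds the SQL for a cron-scheduled task. The task, warehouse, and stored procedure names are hypothetical, and the helper `build_task_statements` is an illustration rather than part of the framework. The `CREATE TASK ... SCHEDULE = 'USING CRON ...'` form and the need to resume a newly created task are standard Snowflake behavior.

```python
def build_task_statements(
    task_name: str,
    warehouse: str,
    cron: str,
    procedure_call: str,
    timezone: str = "UTC",
) -> list[str]:
    """Build SQL that creates and enables a scheduled Snowflake task.

    Identifiers are interpolated directly, so they must come from
    trusted configuration, never from raw user input.
    """
    create = (
        f"CREATE OR REPLACE TASK {task_name}\n"
        f"  WAREHOUSE = {warehouse}\n"
        f"  SCHEDULE = 'USING CRON {cron} {timezone}'\n"
        f"AS\n"
        f"  CALL {procedure_call};"
    )
    # Tasks are created in a suspended state and must be resumed to run.
    resume = f"ALTER TASK {task_name} RESUME;"
    return [create, resume]


# Hypothetical names: run the DQ rules for ORDERS every day at 06:00 UTC.
statements = build_task_statements(
    task_name="dq_daily_orders",
    warehouse="dq_wh",
    cron="0 6 * * *",
    procedure_call="run_dq_rules('ORDERS')",
)
for statement in statements:
    print(statement)
```

With a Snowpark session available, each statement could be submitted with `session.sql(statement).collect()`.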

- Alerts and Notifications

The framework integrates with Slack and Outlook to send real-time alerts and notifications about DQ issues. When a threshold is breached or an issue is detected, stakeholders are notified immediately, enabling swift resolution.
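A threshold check of this kind can be sketched as follows. The function name, the pass-ratio threshold, and the message wording are assumptions made for the example. The returned dictionary uses the simple `{"text": ...}` shape that Slack incoming webhooks accept, and the sketch only builds the payload without sending it.

```python
from typing import Optional


def build_alert(
    rule_name: str, passed_rows: int, total_rows: int, min_pass_ratio: float
) -> Optional[dict[str, str]]:
    """Return a Slack-style message payload if a rule breaches its threshold.

    Returns None when the pass ratio meets the threshold, so callers
    only notify stakeholders when there is something to act on.
    """
    ratio = passed_rows / total_rows if total_rows else 1.0
    if ratio >= min_pass_ratio:
        return None
    return {
        "text": (
            f"DQ rule '{rule_name}' pass rate {ratio:.1%} "
            f"is below the threshold of {min_pass_ratio:.1%}"
        )
    }


# Hypothetical rule results: the first breaches a 98% threshold, the second does not.
breach = build_alert("orders_amount_between", 940, 1000, 0.98)
healthy = build_alert("orders_id_unique", 990, 1000, 0.98)
```

Returning `None` for healthy rules keeps the notification step trivial: post the payload when one exists, otherwise do nothing.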

- NLP-Based DQ Insights

Leveraging Snowflake Cortex, the NLP-powered app enables users to query DQ results using natural language, providing non-technical users with straightforward access to valuable data quality insights. Users can ask questions such as the following and receive clear, actionable insights directly from the data.

- What are the current data quality issues?

- Which rules are failing the most?

- How has data quality improved over time?

- DQ Dashboards

These dashboards offer a comprehensive view of DQ metrics, trends, and rule performance. Users can track data quality across datasets and monitor improvements over time. The dashboards also provide interactive visualizations of data health, and drill-down capabilities give in-depth insight into specific issues for more detailed analysis.

- Data Pipeline Integration

The framework can be integrated with existing data pipelines, ensuring that DQ checks are part of the ETL/ELT process. These checks are automatically triggered as part of the data pipeline workflow, verifying data quality before downstream usage.
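One common way to make DQ checks part of a pipeline is a quality gate that stops the run when blocking rules fail. The sketch below is an assumption about how such a gate could work, not a description of the framework's internals. The result format, the severity labels, and the `DataQualityError` exception are all hypothetical.

```python
from typing import Any


class DataQualityError(Exception):
    """Raised when a blocking DQ rule fails and the pipeline should stop."""


def enforce_quality_gate(
    results: list[dict[str, Any]],
    blocking_severities: tuple[str, ...] = ("critical",),
) -> list[str]:
    """Stop the pipeline on blocking failures and return non-blocking ones.

    Each result is expected to carry 'rule', 'passed', and 'severity'.
    """
    failed = [r for r in results if not r["passed"]]
    blocking = [r for r in failed if r["severity"] in blocking_severities]
    if blocking:
        names = ", ".join(r["rule"] for r in blocking)
        raise DataQualityError(f"Blocking DQ rules failed: {names}")
    # Remaining failures are warnings; downstream steps may proceed.
    return [r["rule"] for r in failed]


# Hypothetical validation results produced before loading downstream tables.
validation_results = [
    {"rule": "orders_id_unique", "passed": True, "severity": "critical"},
    {"rule": "orders_country_not_null", "passed": False, "severity": "warning"},
]
warnings = enforce_quality_gate(validation_results)
print("Proceeding with warnings:", warnings)
```

Separating blocking failures from warnings lets a pipeline halt on serious problems while still surfacing minor ones for later review.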

How the Framework Adds Value

As organizations rely more on data to guide strategies, ensuring the accuracy, consistency, and integrity of that data becomes a top priority. Tiger Analytics’ Snowflake Native Data Quality Framework addresses this need by providing a comprehensive, end-to-end solution that integrates seamlessly into your existing Snowflake environment. With customizable features and actionable insights, it empowers teams to act quickly and efficiently. Here are the key benefits explained:

- End-to-End Solution: Everything from profiling to monitoring is integrated in one place.

- Customizable: Flexibly configure rules, thresholds, and schedules to meet your specific business requirements.

- Real-Time DQ Enforcement: Maintain data quality throughout the entire data lifecycle with real-time checks.

- Seamless Integration: Fully native to Snowflake, integrates easily with existing data pipelines and workflows.

- Actionable Insights: Provides clear, actionable insights to help users take corrective actions quickly.

- Scalability: Leverages Snowflake’s compute power, allowing for easy scaling as data volume grows.

- Minimal Latency: Ensures efficient processing and reduced delays by executing DQ checks directly within Snowflake.

- User-Friendly: Intuitive interface for both technical and non-technical users, enabling broad organizational adoption.

- Proactive Monitoring: Identify data quality issues before they affect downstream processes.

- Cost-Efficiency: Reduces the need for external tools, minimizing costs and eliminating data movement overhead.

Next Steps

While the framework offers a wide range of features to address data quality needs, we are continuously looking for opportunities to enhance its functionality. We at Tiger Analytics are exploring additional improvements that will further streamline processes and increase flexibility. Some of the enhancements we are currently working on include:

- AI-Driven Recommendations: Use machine learning to improve and refine DQ rule suggestions.

- Anomaly Detection: Leverage AI to detect unusual patterns and data quality issues that may not be captured by traditional rules.

- Advanced Visualizations: Enhance dashboards with predictive analytics and deeper trend insights.

- Expanded Integration: Explore broader support for hybrid cloud and multi-database environments.

A streamlined data quality framework redefines how organizations ensure and monitor data quality. By leveraging Snowflake’s capabilities and tools like Snowpark, our Snowflake Native Data Quality Framework simplifies complex processes and delivers measurable value.